Hi there!

Welcome to Shibei Meng (孟诗蓓)’s website! I am a third-year master’s student in Computer Science and Technology at Beijing Normal University, supervised by Prof. Saihui Hou and Prof. Yongzhen Huang.

My research lies at the intersection of Computer Vision, Multimodal Large Language Models (MLLMs), and Video Understanding.

Collaboration and discussion are welcome. Feel free to drop me an email :)

🔥 News

- 2025.07: 🎉 StreamGait & MirrorGait has been accepted by ACM MM’25!

- 2025.06: 🎉 OmniDiff & M3Diff has been accepted by ICCV’25!

- 2025.06: 🎉 FastPoseGait & GPGait++ has been accepted by TPAMI’25!

- 2024.07: 🎉 GaitHeat was accepted by ECCV’24!

- 2023.07: 🎉 GPGait was accepted by ICCV’23!

📝 Publications

Seeing from Magic Mirror: Contrastive Learning from Reconstruction for Pose-based Gait Recognition

Shibei Meng, Saihui Hou, Yang Fu, Xuecai Hu, Junzhou Huang, and Yongzhen Huang

We present MirrorGait, a self-supervised 3D-aware pre-training framework that lifts 2D poses to 3D for large-scale contrastive learning. Trained on curated data from 353 YouTube live-stream channels spanning 30 countries and 81 cities, our method achieves state-of-the-art results on Gait3D, GREW, and OUMVLP-Pose with minimal fine-tuning.

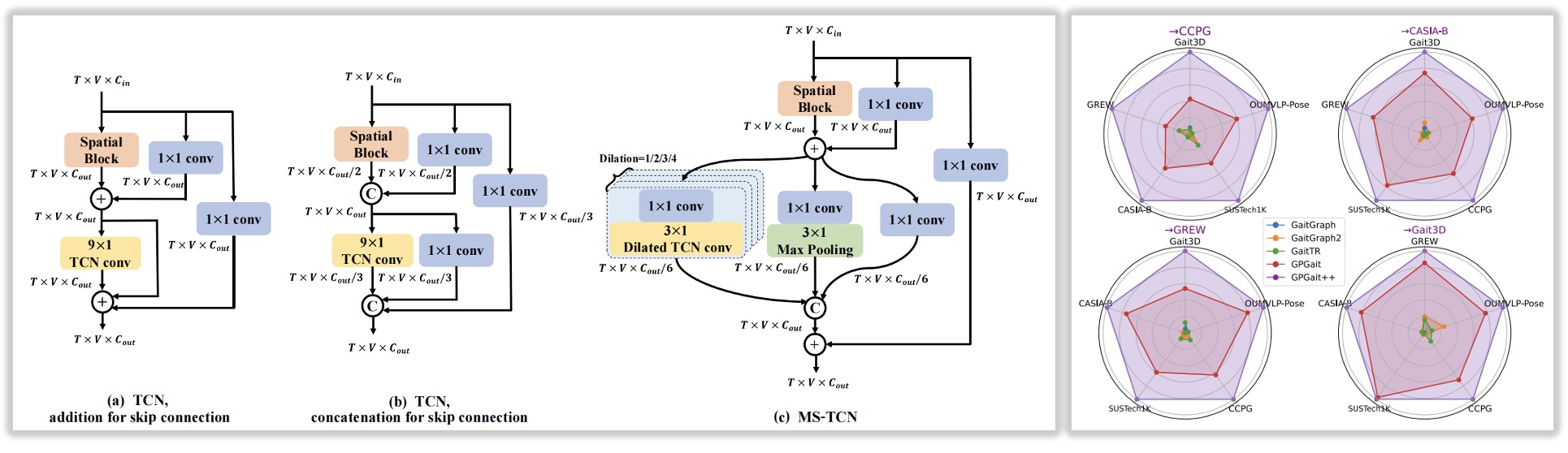

From FastPoseGait to GPGait++: Bridging the Past and Future for Pose-based Gait Recognition

Shibei Meng*, Yang Fu*, Saihui Hou, Xuecai Hu, Chunshui Cao, Xu Liu, and Yongzhen Huang

- FastPoseGait: We developed the FastPoseGait open-source toolbox and used it to establish a fairer, more consistent benchmark for gait recognition algorithms.

- GPGait++: We proposed GPGait++, a new method that significantly outperforms all prior pose-based work in challenging cross-domain scenarios.

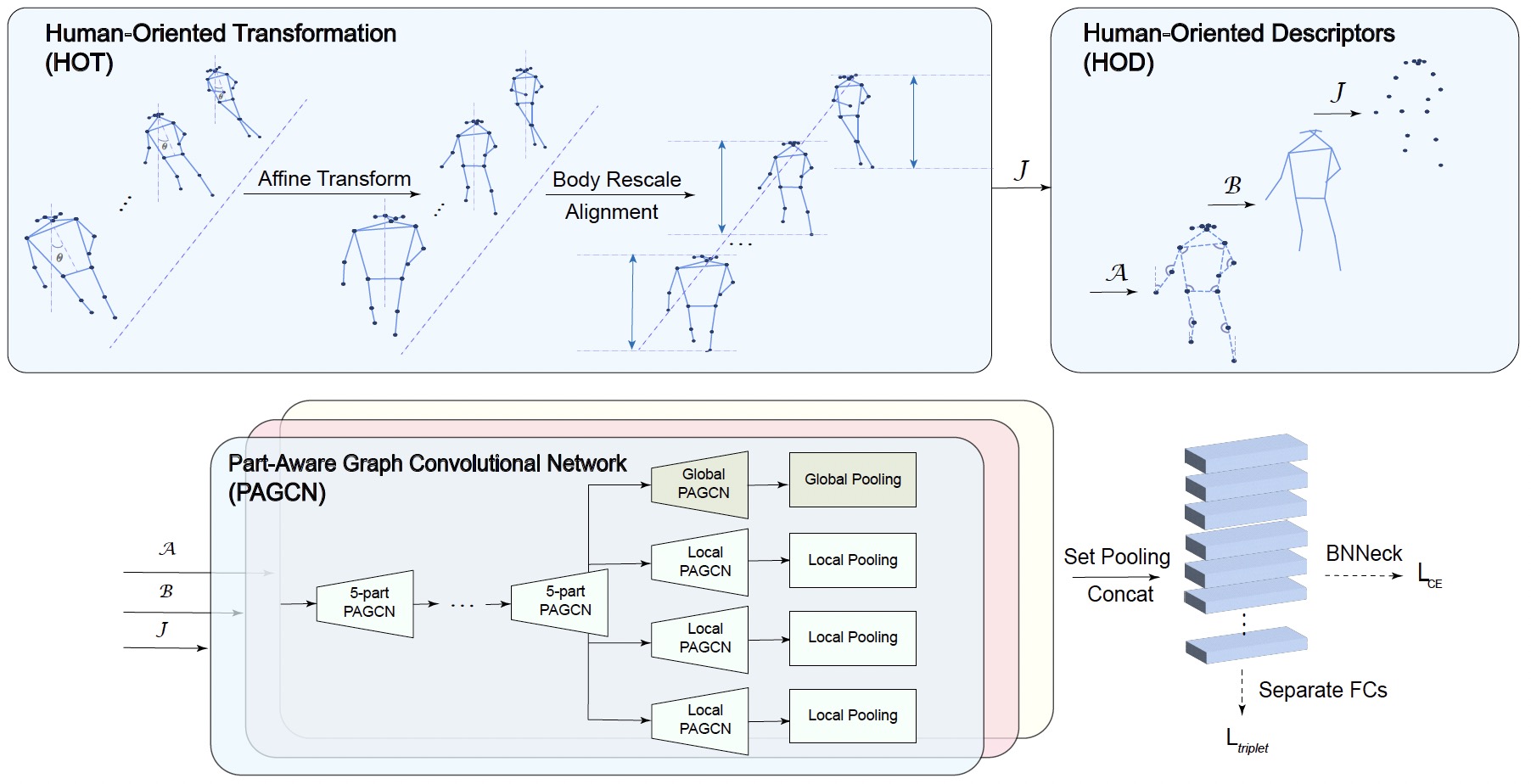

GPGait: Generalized Pose-based Gait Recognition

Yang Fu*, Shibei Meng*, Saihui Hou, Xuecai Hu, and Yongzhen Huang

We introduce GPGait, a framework designed to improve the generalization of pose-based gait recognition across datasets. It first applies a Human-Oriented Transformation (HOT) to obtain a unified, feature-rich pose representation. It then uses a Part-Aware Graph Convolutional Network (PAGCN) to extract local and global spatial features from the keypoints, making the model more robust in diverse scenarios.

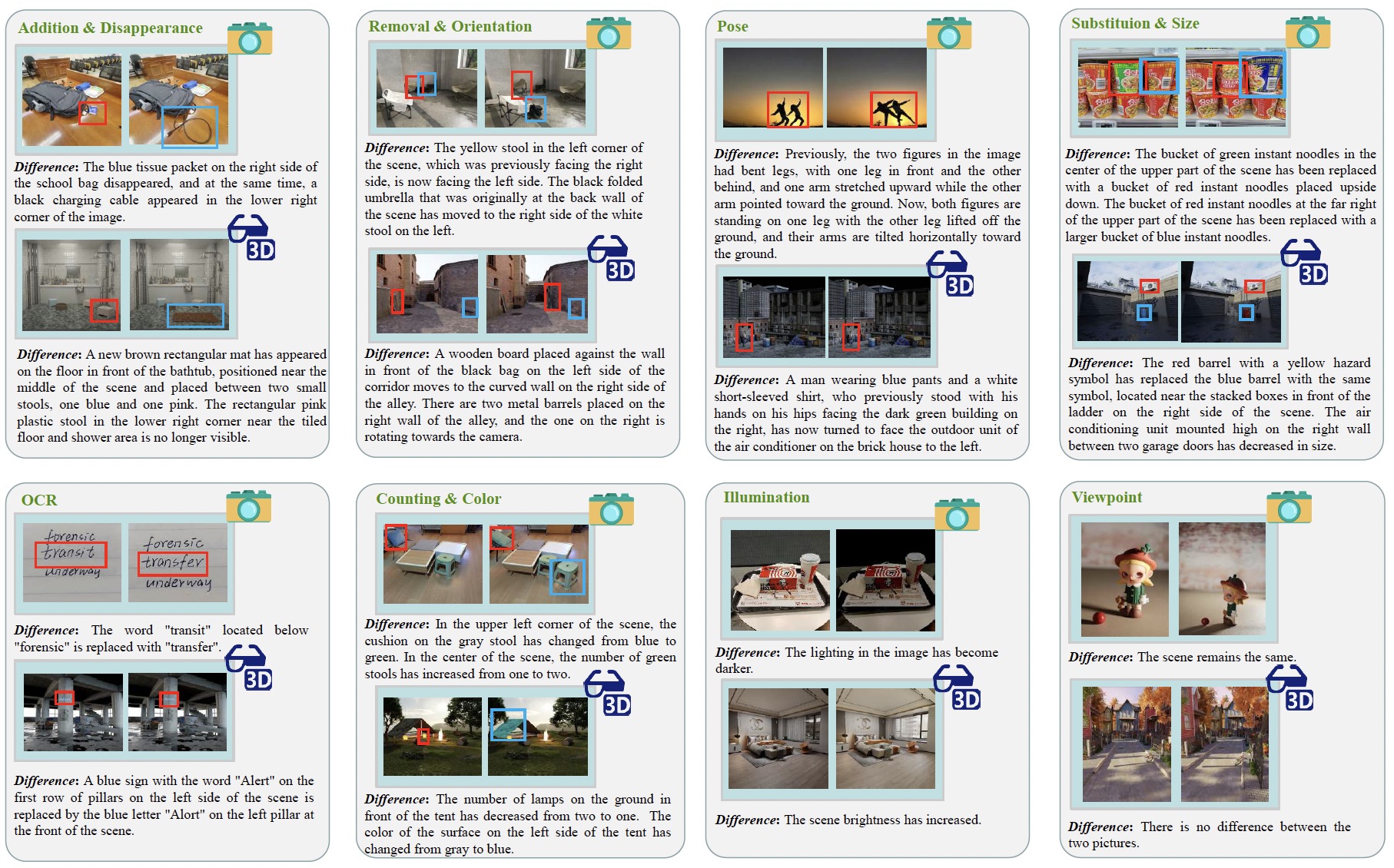

OmniDiff: A Comprehensive Benchmark for Fine-grained Image Difference Captioning

Yuan Liu, Saihui Hou, Saijie Hou, Jiabao Du, Shibei Meng, and Yongzhen Huang

[Paper] [Dataset (coming soon)]

- OmniDiff: We construct OmniDiff, a high-quality dataset comprising 324 diverse scenarios, encompassing both real-world complex environments and 3D synthetic settings.

- M3Diff: We integrate a plug-and-play Multi-scale Differential Perception (MDP) Module into the MLLM architecture, establishing a strong base model specifically tailored for Image Difference Captioning (IDC).

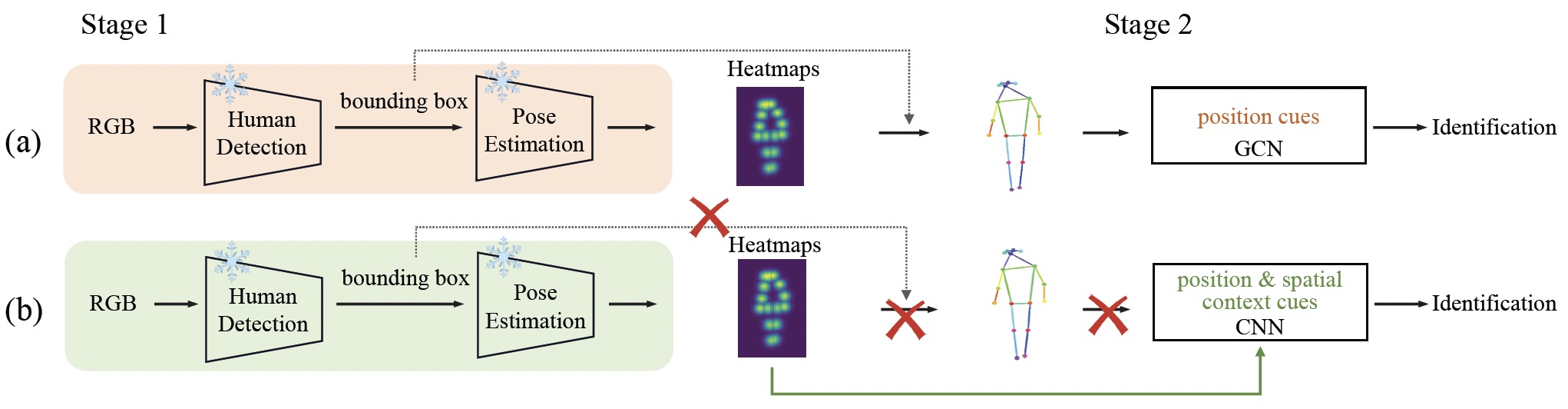

Cut out the Middleman: Revisiting Pose-based Gait Recognition

Yang Fu, Saihui Hou, Shibei Meng, Xuecai Hu, Chunshui Cao, Xu Liu, and Yongzhen Huang

We introduce a new method for gait recognition that uses heatmaps taken directly from upstream methods as the primary representation, instead of the more traditional skeletons. We also identify the key challenges associated with using heatmaps and provide a simple yet non-trivial solution to overcome them.

📖 Education

- 2023.09 - present, M.Eng. in Computer Science and Technology, School of Artificial Intelligence, Beijing Normal University.

- 2019.09 - 2023.07, B.Eng. in Computer Science and Technology, School of Artificial Intelligence, Beijing Normal University.

🎖 Honors and Awards

- National Scholarship, 2025.

- First Prize in ACM MM’24 Multimodal Gait Recognition Challenge (Pose Track), 2024.

- Outstanding Undergraduate of Beijing Normal University, 2023.

- Excellent Undergraduate Thesis of Beijing Normal University, 2023.